DESCRIPTION

Training time is shortened by 70%, and training for routine scenarios (such as dish-washing or folding clothes) can be completed in just 2 hours.

During autonomous tasks, the DOBOT X-Trainer can quickly correct real-time disturbances, like recognizing and removing stains during dish-washing, showcasing advanced AI capabilities.

A 25Hz end-to-end high-performance motion interface improves response speed by 150% compared to similar products and delivers more stable motion.

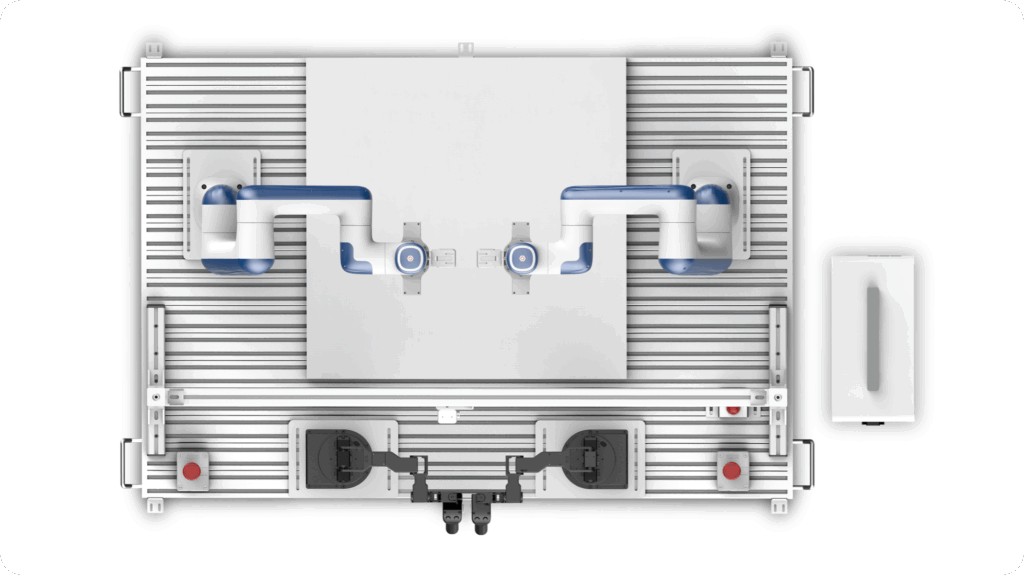

Keeps hand loads under 400g and uses 3D-printed monolithic joints to reduce part count, giving the operator a lightweight feel.

The master hand has unlock and record buttons: the unlock button quickly locks or unlocks the master hand pose to reduce fatigue, while the record button gives convenient control over data capture.

Features a spring-assisted chassis, high-performance servos for precise motion, and an optimized primary manipulator for intuitive teleoperation, all of which reduce user fatigue.

Achieves ±0.05mm repeat positioning accuracy, enabling precise experimental operations and sensitive data collection tasks.

Offers a large 625mm reach per arm, expandable to 1200mm in dual-arm mode, with 6-axis flexibility for broad workspace coverage and diverse data collection.

Delivers 2kg single-arm and 3kg dual-arm payload capacities, covering diverse applications, with a top speed of 1.6m/s for efficient high-speed data collection.

Includes auto de-sync of master-slave arms on power loss/alarms, master hand drop detection, and low-speed master-slave sync to prevent collisions.

The DOBOT X-Trainer’s Nova2 cobot has 5-level collision detection and ISO/TS 15066 certification for safe human-robot interaction.

The workspace is padded with flexible EVA foam, which cushions accidental drops of the end-effector and minimizes the risk of damage to the gripper and arm.

DOBOT X-Trainer provides a complete SDK covering the full workflow of data collection, model training, and autonomous inference. Detailed documentation guides users step by step through the entire data processing pipeline, simplifying both learning and application.

Engineered with open APIs for accessing key data such as master/slave joint angles, gripper control, and camera RGB/depth streams, enabling flexible integration with custom AI models and research projects. The SDK also supports exporting specialized data formats for direct use with domain-specific neural networks.
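To give a feel for the kind of data listed above, here is a minimal Python sketch of how one recorded teleoperation episode might be organized on the user's side. It does not use the DOBOT SDK, and none of the names (`TeleopSample`, `Episode`, `to_training_pairs`) come from it; they are hypothetical. The sketch also assumes a single 6-axis arm, samples logged at the 25Hz interface rate, a gripper value between 0.0 and 1.0, and camera frames stored as file paths.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

JOINTS_PER_ARM = 6    # the arms are described as 6-axis
SAMPLE_RATE_HZ = 25   # assumption: one sample per cycle of the 25Hz interface


@dataclass
class TeleopSample:
    """One time step of a recording (hypothetical layout, not the SDK's)."""
    t: float                    # seconds since the episode started
    master_joints: List[float]  # master-hand joint angles
    slave_joints: List[float]   # follower-arm joint angles
    gripper: float              # assumed range: 0.0 = open, 1.0 = closed
    rgb_path: str               # assumed: RGB frame saved to disk
    depth_path: str             # assumed: depth frame saved to disk


@dataclass
class Episode:
    """A sequence of samples recorded for one task demonstration."""
    task: str
    samples: List[TeleopSample] = field(default_factory=list)

    def add(self, master: List[float], slave: List[float], gripper: float,
            rgb_path: str, depth_path: str) -> None:
        # Reject samples that do not match the assumed 6-axis layout.
        if len(master) != JOINTS_PER_ARM or len(slave) != JOINTS_PER_ARM:
            raise ValueError(f"expected {JOINTS_PER_ARM} joint angles per arm")
        # Timestamp derived from the sample index and the assumed rate.
        t = len(self.samples) / SAMPLE_RATE_HZ
        self.samples.append(
            TeleopSample(t, list(master), list(slave), gripper,
                         rgb_path, depth_path))

    def to_training_pairs(self) -> List[Tuple[List[float], List[float]]]:
        """Pair each follower state with the operator command at that step."""
        return [(s.slave_joints, s.master_joints + [s.gripper])
                for s in self.samples]


# Usage: record three dummy steps and turn them into (state, action) pairs.
episode = Episode(task="fold_clothes")
for i in range(3):
    angles = [0.1 * i] * JOINTS_PER_ARM
    episode.add(angles, angles, 0.0, f"rgb_{i:04d}.png", f"depth_{i:04d}.png")

pairs = episode.to_training_pairs()
```

Pairing the follower state with the operator command is one common way to set up imitation learning data; the format the SDK actually exports may differ, so check its documentation before relying on this layout.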
